For the last 18 months, Webtide engineers have been working on the most extensive overhaul of the Eclipse Jetty HTTP server and Servlet container since its inception in 1995. The headline for the release of Jetty 12.0.0 could be “Support for the Servlet 6.0 API from Jakarta EE 10”, but the full story is of a root-and-branch overhaul and modernization of the project to set it up for yet more decades of service.

This blog is an introduction to the features of Jetty 12, many of which will be the subject of further deep-dive blogs.

Servlet API independent

In order to support the Servlet 6.0 API, we took the somewhat counterintuitive approach of making Jetty Servlet API independent. Specifically, we have removed any dependency on the Servlet API from the core Jetty HTTP server and handler architecture. This takes Jetty back to its roots, as it was Servlet API independent for the first decade of the project.

The Servlet API independent approach has the following benefits:

- There is now a set of jetty-core modules that provide a high-performance and scalable HTTP server. The jetty-core modules are usable directly when there is no need for the Servlet API and the overhead introduced by its features and legacy.

- For projects like Jetty, support must be maintained for multiple versions of the Servlet APIs. We are currently supporting branches for Servlet 3.1 in Jetty 9.4.x; Servlet 4.0 in Jetty 10.0.x; and Servlet 5.0 in Jetty 11.0.x. Adding a fourth branch to maintain would have been intolerable. With Jetty 12, our ongoing support for Servlet 4.0, 5.0 and 6.0 will be based on the same core HTTP server in the one branch.

- The Servlet APIs have many deprecated features that are no longer best practice. With Servlet 6.0, some of these were finally removed from the specification (e.g. Object Wrapper Identity). Removing these features from the Jetty core modules allows for better performance and cleaner implementations of the current APIs.

Multiple EE Environments

To support the Servlet APIs (and related Jakarta EE APIs) on top of the jetty-core, Jetty 12 uses an Environment abstraction that introduces another tier of class loading and configuration. Each Environment holds the applicable Jakarta EE APIs needed to provide Servlet support (but not the full suite of EE APIs).

Multiple environments can be run simultaneously on the same server, and Jetty 12 supports:

- EE8 (Servlet 4.0) in the javax.* namespace,

- EE9 (Servlet 5.0) in the jakarta.* namespace with deprecated features

- EE10 (Servlet 6.0) in the jakarta.* namespace without deprecated features.

- Core environments with no Servlet support or overhead.

Core Environment

jetty-core modules are now available for direct support of HTTP without the need for the overhead and legacy of the Servlet API. As part of this effort, many APIs have been updated and refined:

- The core Sessions are now directly usable.

- A core Security model has been developed that is used to implement the Servlet security model, but avoids some of its bizarre behaviours (I’m talking about you, exposed methods!).

- The Jetty WebSocket API has been updated and can be used over the top of the core WebSocket APIs.

- The Jetty HttpClient APIs have been updated.

Performance

Jetty 12 has achieved significant performance improvements. Our continuous performance tracking indicates that we have equal or better CPU utilisation for a given load, with lower latency and no long tail of quality of service.

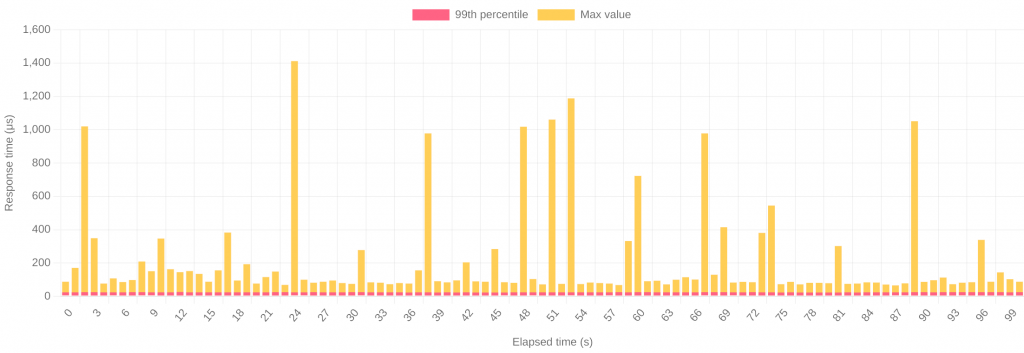

Our tests currently offer 240,000 requests per second and then measure quality of service by latency (99th percentile and maximum). Below is the plot of latency for Jetty 11:

This shows that the orange 99th percentile latency is almost too small to see in the plot (at 24.1 µs average), and all you do see is the yellow plot of the maximal latency (max 1400 µs). Whilst these peaks look large, the scale is in microseconds, so the longest maximal delay is about 1.4 milliseconds and 99% of requests are handled in 0.024 ms!

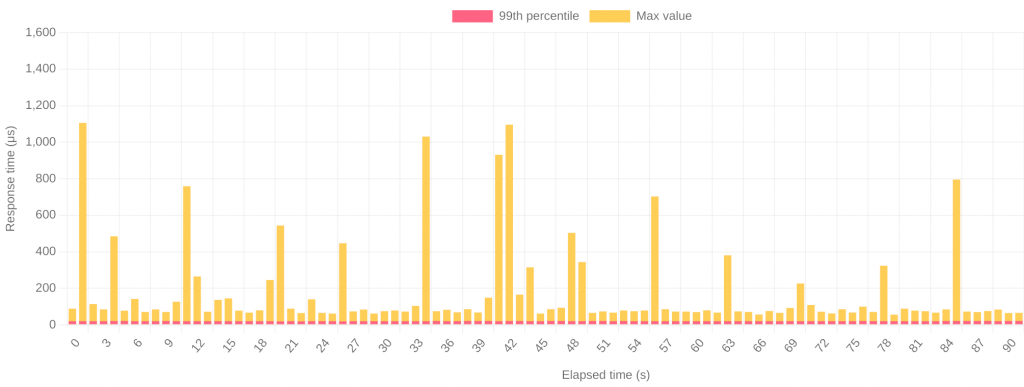

Below is the same plot of latency for Jetty 12 handling 240,000 requests per second:

The 99th percentile latency is now only 20.2 µs, and the peaks are less frequent and rarely over 1 ms, with a maximum of 1100 µs.
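To make the two metrics concrete, here is a minimal sketch of how a 99th percentile and a maximum could be derived from a set of recorded latencies. It uses the nearest-rank method and is only an illustration: it is not the tooling used to produce the plots, and `LatencySummary` and its methods are hypothetical names.

```java
import java.util.Arrays;

// Illustrative only: nearest-rank percentile over latencies recorded in nanoseconds.
final class LatencySummary {
    // Returns the smallest sample such that at least `percentile` percent of samples are <= it.
    static long percentile(long[] samplesNanos, double percentile) {
        if (samplesNanos.length == 0)
            throw new IllegalArgumentException("no samples");
        long[] sorted = samplesNanos.clone();
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(percentile * sorted.length / 100.0);
        return sorted[Math.max(rank, 1) - 1];
    }

    // The single worst latency observed.
    static long max(long[] samplesNanos) {
        return Arrays.stream(samplesNanos).max().getAsLong();
    }

    // Unit conversion, e.g. 1,400,000 ns (1400 µs) is 1.4 ms.
    static double nanosToMillis(long nanos) {
        return nanos / 1_000_000.0;
    }
}
```

With this definition, a single slow request among a hundred fast ones moves the maximum but not the 99th percentile, which is why the two lines in the plots can differ by orders of magnitude.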

You can see the latest continuous performance testing of Jetty 12 here.

New Asynchronous IO abstraction

jetty-core provides a new asynchronous abstraction that is a significant evolution of the asynchronous approaches developed in Jetty over many previous releases.

But “Loom”, I hear some say. Why be asynchronous if Loom will solve all your problems? Firstly, Loom is not a silver bullet, and we have seen no performance benefits from adopting Loom in the core of Jetty. If we were to adopt Loom in the core, we’d lose the significant benefits of our advanced execution strategy (which ensures that tasks have a good chance of being executed on a CPU core with a hot cache filled with the relevant data).

However, there are definitely applications that will benefit from the simple scaling offered by Loom’s virtual threads, so Jetty has taken the approach of staying asynchronous in the core while offering optional support for Loom in our execution strategy. Virtual threads may be used by the execution strategy, rather than submitting blocking jobs to a thread pool. This is a best-of-both-worlds approach, as it lets us deal with the highly complex but efficient and scalable asynchronous core, whilst letting applications be written in a blocking style and still scale.
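The split described here can be sketched with plain JDK types: non-blocking work runs directly on the calling thread, while work that may block is handed to a separate executor. This is a toy model under stated assumptions, not Jetty's execution strategy; `HybridDispatcher` and its methods are hypothetical names. On a JDK with virtual threads (Java 21), `Executors.newVirtualThreadPerTaskExecutor()` could be passed as the blocking executor, while any ordinary thread pool works on older JDKs.

```java
import java.util.concurrent.Executor;

// Toy model only: run non-blocking tasks on the caller, offload blocking ones.
final class HybridDispatcher {
    private final Executor blockingExecutor;

    HybridDispatcher(Executor blockingExecutor) {
        this.blockingExecutor = blockingExecutor;
    }

    // Runs the task immediately on the calling thread, which is likely
    // to have hot caches for the data it has just produced.
    void runNonBlocking(Runnable task) {
        task.run();
    }

    // Hands the task to the blocking executor: a thread pool,
    // or virtual threads where the JDK provides them.
    void runBlocking(Runnable task) {
        blockingExecutor.execute(task);
    }
}
```

The point of the design is that the choice of how to run blocking application code is isolated behind one executor, so the asynchronous core does not change when virtual threads are switched on or off.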

But I hear others say: “Why yet another async abstraction when there are already so many: reactive, Flow, NIO, servlet, etc.?” Adopting a simple but powerful core async abstraction allows us to easily adapt it to support many other abstractions: specifically, Servlet asynchronous IO, Flow and blocking InputStream/OutputStream are trivial to implement. Other features of the abstraction are:

- Input side can be used iteratively, avoiding deep stacks and needless dispatches. Borrowed from Servlet API.

- Demand API simplified from Flow/Reactive

- Retainable ByteBuffers for zero copy handling

- Content abstraction to simply handle errors and trailers inline.

The asynchronous APIs are available to be used directly in jetty-core, or applications may simply wrap them in alternative asynchronous or blocking APIs, or simply use Servlets and never see them (but benefit from them).
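The read/demand contract behind the first two features can be modelled using only JDK types. The sketch below is a deliberately simplified, single-threaded toy: `ToySource`, `ToySource.Chunk` and `ToyStringReader` are hypothetical names and are not the Jetty `Content.Source` API. It only shows the shape of the idea: `read()` may return null, and `demand(Runnable)` asks for a callback once a read would succeed.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.concurrent.CompletableFuture;

// Toy, single-threaded model of a pull-style source; NOT the Jetty API.
final class ToySource {
    // A chunk carries bytes plus a "last" flag, so EOF travels in-band with the data.
    static final class Chunk {
        final byte[] bytes;
        final boolean last;

        Chunk(byte[] bytes, boolean last) {
            this.bytes = bytes;
            this.last = last;
        }
    }

    private final Queue<Chunk> chunks = new ArrayDeque<>();
    private Runnable demand;

    // Returns the next chunk, or null if nothing is available right now.
    Chunk read() {
        return chunks.poll();
    }

    // Registers a one-shot callback that is run once a chunk is available.
    void demand(Runnable callback) {
        if (chunks.isEmpty())
            demand = callback;
        else
            callback.run();
    }

    // Producer side: makes a chunk available and satisfies any pending demand.
    void offer(String text, boolean last) {
        chunks.add(new Chunk(text.getBytes(StandardCharsets.UTF_8), last));
        Runnable callback = demand;
        demand = null;
        if (callback != null)
            callback.run();
    }
}

// Reads in a loop while chunks are available; demands only when the source runs dry.
final class ToyStringReader extends CompletableFuture<String> {
    private final ToySource source;
    private final StringBuilder text = new StringBuilder();

    ToyStringReader(ToySource source) {
        this.source = source;
        source.demand(this::onAvailable);
    }

    private void onAvailable() {
        while (true) {
            ToySource.Chunk chunk = source.read();
            if (chunk == null) {
                source.demand(this::onAvailable);
                return;
            }
            // Simplification: assumes chunk boundaries fall on character boundaries.
            text.append(new String(chunk.bytes, StandardCharsets.UTF_8));
            if (chunk.last) {
                complete(text.toString());
                return;
            }
        }
    }
}
```

Offering "Hello, " and then a final "Jetty" chunk to a `ToySource` completes the reader's future with "Hello, Jetty". The loop consumes everything that is already available before it demands again, which is what keeps stacks shallow and avoids needless dispatches.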

Below is an example of using the new APIs to asynchronously read content from a Content.Source into a string:

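    import java.nio.charset.Charset;
    import java.util.concurrent.CompletableFuture;

    import org.eclipse.jetty.io.Content;
    import org.eclipse.jetty.util.CharsetStringBuilder;

    public static class FutureString extends CompletableFuture<String> {
        private final CharsetStringBuilder text;
        private final Content.Source source;

        public FutureString(Content.Source source, Charset charset) {
            this.source = source;
            this.text = CharsetStringBuilder.forCharset(charset);
            // Ask to be called back once there is content to read.
            source.demand(this::onContentAvailable);
        }

        private void onContentAvailable() {
            while (true) {
                Content.Chunk chunk = source.read();
                if (chunk == null) {
                    // Nothing available right now: register demand again and give up the thread.
                    source.demand(this::onContentAvailable);
                    return;
                }

                try {
                    if (Content.Chunk.isFailure(chunk))
                        throw chunk.getFailure();

                    if (chunk.hasRemaining())
                        text.append(chunk.getByteBuffer());

                    if (chunk.isLast()) {
                        // Stop reading even if complete() returns false (the future was already completed).
                        complete(text.build());
                        return;
                    }
                } catch (Throwable e) {
                    completeExceptionally(e);
                    // Stop reading after a failure rather than looping again.
                    return;
                } finally {
                    chunk.release();
                }
            }
        }
    }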

The asynchronous abstraction will be explained in detail in a later blog, but a few points about the code above are worth noting here:

- There are no data copies into buffers (as is often needed with read(byte[] buffer) style APIs). The chunk may be a slice of a buffer that was read directly from the network, and there are retain() and release() methods to allow references to be kept if need be.

- All data and metadata flow via pull-style calls to the Content.Source.read() method, including bytes of content, failures and EOF indication. Even HTTP trailers are sent as Chunks. This avoids the mutual exclusion that can be needed if there are onData and onError style callbacks.

- The read style is iterative, so there is less need to break down code into multiple callback methods.

- The only callback is to the onContentAvailable method that is passed to Content.Source#demand(Runnable) and is called back when demand is met (i.e. read can be called with a non-null return).
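The retain()/release() idea from the first point is ordinary reference counting. The following is a minimal sketch using only JDK types, assuming the simplest possible contract (the creator holds one reference, and the buffer is recycled when the count reaches zero). `ToyRetainableBuffer` is a hypothetical name and this is not Jetty's implementation.

```java
import java.nio.ByteBuffer;
import java.util.concurrent.atomic.AtomicInteger;

// Toy reference-counted buffer; NOT Jetty's implementation.
final class ToyRetainableBuffer {
    private final ByteBuffer buffer;
    private final Runnable recycler;
    // The creator of the buffer holds the first reference.
    private final AtomicInteger references = new AtomicInteger(1);

    ToyRetainableBuffer(ByteBuffer buffer, Runnable recycler) {
        this.buffer = buffer;
        this.recycler = recycler;
    }

    // A read-only view that shares the same memory: no bytes are copied.
    ByteBuffer view() {
        return buffer.asReadOnlyBuffer();
    }

    // Called by code that wants to keep using the buffer after the current call returns.
    void retain() {
        references.updateAndGet(count -> {
            if (count == 0)
                throw new IllegalStateException("already released");
            return count + 1;
        });
    }

    // Drops one reference; returns true when the last reference is gone and the buffer was recycled.
    boolean release() {
        int remaining = references.updateAndGet(count -> {
            if (count == 0)
                throw new IllegalStateException("released too many times");
            return count - 1;
        });
        if (remaining == 0) {
            recycler.run();
            return true;
        }
        return false;
    }
}
```

In this model, a reader that only inspects the bytes during the callback calls release() once, as the example above does in its finally block. A reader that stores the buffer for later calls retain() first, and the memory is returned to its pool only after every holder has released it.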

Handler, Request & Response design

The core building blocks of a Jetty server are the Handler, Request and Response interfaces. These have been significantly revised in Jetty 12 to:

- Fully embrace and support the asynchronous abstraction. The previous Handler design predated asynchronous request handling and thus was not entirely suitable for purpose.

- The Request is now immutable, which solves many issues (see “Mutable Request” in Less is More Servlet API) and allows for efficiencies and simpler asynchronous implementations.

- Duplication has been removed from the APIs so that wrapping requests and responses is now simpler and less error-prone (e.g. there is no longer the need to wrap both a sendError and a setStatus method to capture the response status).
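The benefit of removing duplicated methods can be shown with a small toy model. All types below (`ToyResponse`, `BasicToyResponse`, `StatusRecordingResponse`) are hypothetical and are not the Jetty interfaces; the sketch only assumes that error reporting is expressed through the same `setStatus` call as everything else, so a wrapper has a single method to intercept.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model only; these are hypothetical types, not the Jetty interfaces.
interface ToyResponse {
    int getStatus();

    void setStatus(int status);

    // Error helper expressed via setStatus, so wrappers see every status change in one place.
    static void writeError(ToyResponse response, int status) {
        response.setStatus(status);
    }
}

final class BasicToyResponse implements ToyResponse {
    private int status = 200;

    @Override
    public int getStatus() {
        return status;
    }

    @Override
    public void setStatus(int status) {
        this.status = status;
    }
}

// Delegating wrapper that records every status passed through it.
final class StatusRecordingResponse implements ToyResponse {
    private final ToyResponse wrapped;
    private final List<Integer> statuses = new ArrayList<>();

    StatusRecordingResponse(ToyResponse wrapped) {
        this.wrapped = wrapped;
    }

    @Override
    public int getStatus() {
        return wrapped.getStatus();
    }

    @Override
    public void setStatus(int status) {
        statuses.add(status);
        wrapped.setStatus(status);
    }

    List<Integer> statuses() {
        return statuses;
    }
}
```

Because the error helper goes through `setStatus`, the recording wrapper observes both an explicit `setStatus(201)` and a later `writeError(..., 404)` without overriding a second method.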

Here is an example Handler that asynchronously echoes all of a request’s content back to the response, including any Trailers:

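    import org.eclipse.jetty.http.HttpField;
    import org.eclipse.jetty.http.HttpFields;
    import org.eclipse.jetty.io.Content;
    import org.eclipse.jetty.server.Request;
    import org.eclipse.jetty.server.Response;
    import org.eclipse.jetty.util.Callback;

    public boolean handle(Request request, Response response, Callback callback) {
        response.setStatus(200);

        // -1 means "no content indicated by the request headers".
        long contentLength = -1;
        for (HttpField field : request.getHeaders()) {
            if (field.getHeader() != null) {
                switch (field.getHeader()) {
                    case CONTENT_LENGTH -> {
                        response.getHeaders().add(field);
                        contentLength = field.getLongValue();
                    }
                    case CONTENT_TYPE -> response.getHeaders().add(field);
                    // Prepare to echo trailers back if the request announces them.
                    case TRAILER -> response.setTrailersSupplier(HttpFields.build());
                    // Length is unknown up front, so treat it as "some content".
                    case TRANSFER_ENCODING -> contentLength = Long.MAX_VALUE;
                }
            }
        }

        if (contentLength > 0)
            // Asynchronously copy the request content to the response; the callback completes when done.
            Content.copy(request, response, Response.newTrailersChunkProcessor(response), callback);
        else
            callback.succeeded();
        // Returning true tells the server that this handler has taken responsibility for the request.
        return true;
    }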

Security

With sponsorship from the Eclipse Foundation and the Open Source Technology Improvement Fund, Webtide was able to engage Trail of Bits for a significant security collaboration. There have been 25 issues of various severity discovered, including several which have resulted in CVEs against the previous Jetty releases. The Jetty project has a good security record and this collaboration is proving a valuable way to continue that.

Big update & cleanup

Jetty is a 28-year-old project. A bit of cruft and legacy has accumulated over that time, not to mention that many RFCs have been obsoleted (several times over) in that period.

The new architecture of Jetty 12, together with the namespace break of jakarta.* and the removal of deprecated features in Servlet 6.0, has allowed for a big clean-out of legacy implementations and updates to the latest RFCs.

Legacy support is still provided where possible, either by compliance modes selecting older implementations or just by using the EE8/EE9 Environments.

Conclusion

The Webtide team is really excited to bring Jetty 12 to the market. It is so much more than just a Servlet 6.0 container, offering a fabulous basis for web development for decades more to come.

1 Comment

gregw · 07/08/2023 at 22:23

Jetty 12.0.0 is now live on maven central
