Jetty 9.1.0 has entered round 8 of TechEmpower’s Web Framework Benchmarks. These benchmarks compare over 80 framework and server stacks in a variety of load tests. I’m the first one to complain about unrealistic benchmarks when Jetty does not do well, so before crowing about our good results I should first say that these benchmarks are primarily focused on frameworks and are unrealistic as benchmarks of server performance, as they suffer from many of the failings that I have highlighted previously (see Truth in Benchmarking and Lies, Damned Lies and Benchmarks).

But I don’t want to bury the lede any more than I already have, so I’ll first tell you how Jetty did before going into detail about what we did and what’s wrong with the benchmarks.

What did Jetty do?

Jetty has initially entered the JSON and Plaintext benchmarks:

- Both tests use simple requests and trivial responses containing just the string “Hello World”, encoded either as JSON or as plain text.

- The JSON test has a maximum concurrency of 256 connections with zero-delay turnaround between a response and the next request.

- The plaintext test has a maximum concurrency of 16,384 and uses pipelining to run these connections at what can only be described as a pathological workload!
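To make the pipelining in the plaintext test concrete: a pipelining client writes several requests on one connection before it reads any response. The sketch below only builds the bytes such a client would send. It assumes HTTP/1.1 and the `/plaintext` path used by the servlet mapping in this post; the class name, host name and batch depth are illustrative and are not taken from the benchmark harness.

```java
import java.nio.charset.StandardCharsets;

/** Illustrative only: builds the bytes a pipelining HTTP/1.1 client would send in one write. */
final class PipelinedRequests {

    /** Returns `depth` GET requests for `path`, concatenated back to back. */
    static byte[] build(String host, String path, int depth) {
        if (depth < 1) {
            throw new IllegalArgumentException("depth must be >= 1");
        }
        StringBuilder batch = new StringBuilder();
        for (int i = 0; i < depth; i++) {
            batch.append("GET ").append(path).append(" HTTP/1.1\r\n")
                 .append("Host: ").append(host).append("\r\n")
                 .append("\r\n"); // the blank line ends each request
        }
        return batch.toString().getBytes(StandardCharsets.ISO_8859_1);
    }

    public static void main(String[] args) {
        // Hypothetical values: the pipeline depth used by the benchmark is not stated in this post.
        byte[] batch = build("localhost", "/plaintext", 4);
        System.out.print(new String(batch, StandardCharsets.ISO_8859_1));
    }
}
```

The server sees all of these requests arrive together and must answer them in order, which is why a large number of pipelined connections adds up to such a heavy load.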

How did Jetty go?

At first glance at the results, Jetty looks to have done reasonably well, but on deeper analysis I think we did awesomely well, and an argument can be made that Jetty is the only server tested that has demonstrated truly scalable results.

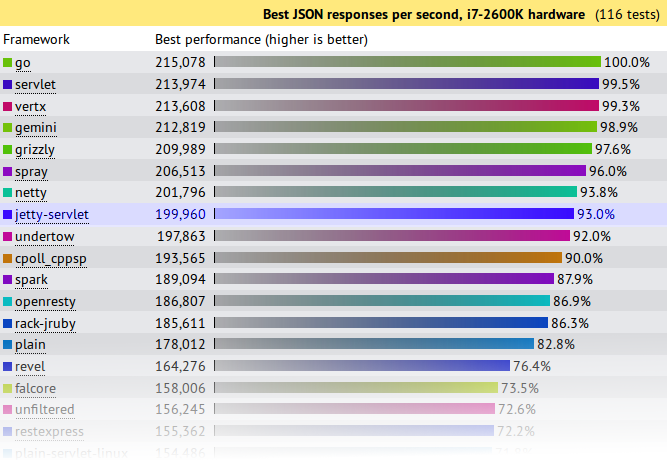

JSON Results

Jetty came 8th out of 107 and achieved 199,960 req/s, which is 93% of the first-place throughput. A good result for Jetty, but not great... until you plot out the results vs concurrency:

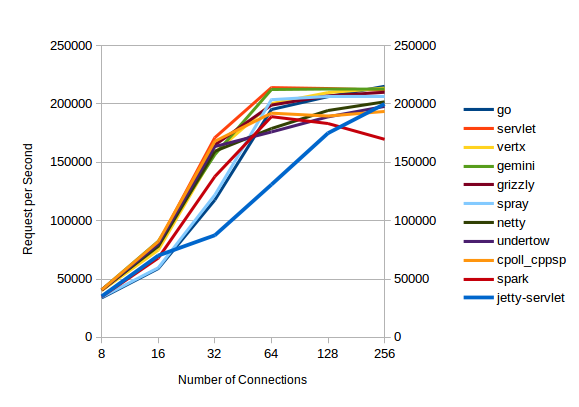

All the servers with high throughputs have essentially maxed out at between 32 and 64 connections and the top servers are actually decreasing their throughput as concurrency scales from 128 to 256 connections.

Of the top-throughput servers, only Jetty displays near-linear throughput growth vs concurrency, and if this test had been extended to 512 connections (or beyond) I think you would see Jetty coming out easily on top. Jetty is investing a little more per connection, so that it can handle a lot more connections.
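One way to put a number on “near linear” is to compare the throughput at each concurrency level with the level before it. The sketch below is an illustration with invented names, not part of the benchmark tooling. When concurrency doubles between measurements, a ratio near 2.0 means linear scaling, a ratio near 1.0 means the server has saturated, and a ratio below 1.0 means throughput is falling.

```java
import java.util.Arrays;

/** Illustrative only: step-to-step throughput ratios for one server. */
final class ScalingRatios {

    /** For each pair of adjacent measurements, returns throughput[i] / throughput[i - 1]. */
    static double[] ratios(double[] throughput) {
        if (throughput.length < 2) {
            throw new IllegalArgumentException("need at least two measurements");
        }
        double[] result = new double[throughput.length - 1];
        for (int i = 1; i < throughput.length; i++) {
            result[i - 1] = throughput[i] / throughput[i - 1];
        }
        return result;
    }

    public static void main(String[] args) {
        if (args.length < 2) {
            System.err.println("usage: java ScalingRatios.java <req/s> <req/s> [...]");
            return;
        }
        // Each argument is the req/s figure at one concurrency level, in increasing order.
        double[] throughput = Arrays.stream(args).mapToDouble(Double::parseDouble).toArray();
        System.out.println(Arrays.toString(ratios(throughput)));
    }
}
```

Feeding it one server’s req/s figures at successive concurrency levels (for example the 32, 64, 128 and 256 connection points discussed above) shows at a glance where that server stops scaling.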

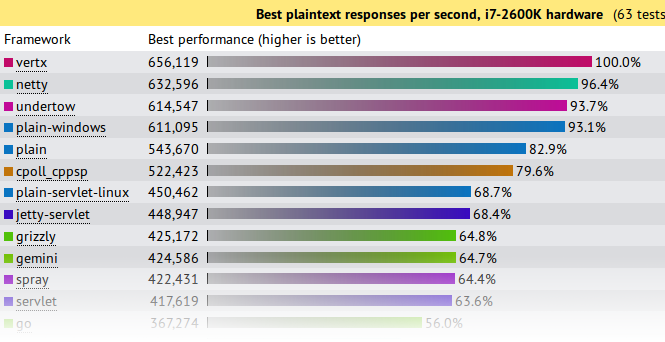

Plaintext Results

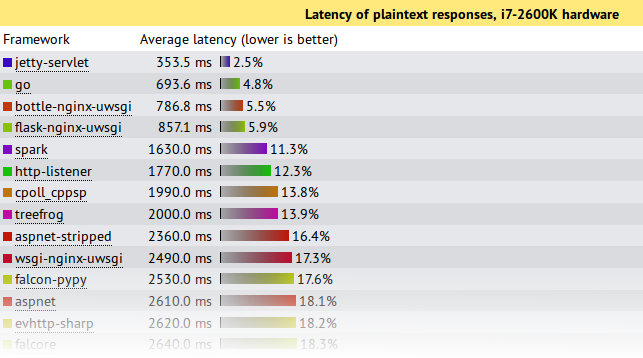

At first glance this again is not so great: we look like the best of the rest, with only 68.4% of the seemingly awesome 600,000+ requests per second achieved by the top 4. But throughput is not the only important metric in a benchmark and things look entirely different if you look at the latency results:

This shows that under this pathological load test, Jetty is the only server to send responses with an acceptable latency during the onslaught. Jetty’s 353.5ms is a workable latency to receive a response, while the next best of 693ms is starting to get long enough for users to register frustration. All the top-throughput servers have average latencies of 7s or more, which is give-up-and-go-make-a-pot-of-coffee time for most users, especially as the average web page needs more than 10 requests to display!
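A rough back-of-the-envelope sketch of what those averages mean for a whole page rather than a single request. It assumes a page that needs 10 requests (the lower bound mentioned above), a browser limited to 6 parallel connections per host (a common default, but an assumption here), no pipelining, and every request seeing the average latency. None of this is measured by the benchmark, and the class name is invented.

```java
/** Illustrative only: crude page-load estimate from an average per-request latency. */
final class PageLoadEstimate {

    /** Rounds of requests needed when at most `parallel` can be in flight at once. */
    static int rounds(int requests, int parallel) {
        return (requests + parallel - 1) / parallel; // ceiling division
    }

    /** Estimated page time in milliseconds: number of rounds times the average latency. */
    static double pageMillis(int requests, int parallel, double avgLatencyMillis) {
        return rounds(requests, parallel) * avgLatencyMillis;
    }

    public static void main(String[] args) {
        int requests = 10; // lower bound on requests per page, from the text
        int parallel = 6;  // assumed browser connection limit per host
        System.out.printf("Jetty (353.5 ms): %.0f ms%n", pageMillis(requests, parallel, 353.5));
        System.out.printf("next best (693 ms): %.0f ms%n", pageMillis(requests, parallel, 693));
        System.out.printf("top throughput (7 s): %.0f ms%n", pageMillis(requests, parallel, 7000));
    }
}
```

Under these assumptions the page takes roughly 0.7s from Jetty, 1.4s from the next best server, and 14s or more from the top-throughput servers.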

Note also that these test runs were only over 15s, so servers with 7s average latency were effectively not serving any requests until the onslaught was over and then just sent all the responses in one great big batch. Jetty is the only server to actually make a reasonable attempt at sending responses during the period that a pathological request load was being received.

If your real world load is anything vaguely like this test, then Jetty is the only server represented in the test that can handle it!

What did Jetty do?

The Jetty entry into these benchmarks does nothing special. It is an out-of-the-box configuration with trivial implementations based on the standard servlet API. More efficient internal Jetty APIs have not been used and there has been no fine tuning of the configuration for these tests. The full source is available, but is presented in summary below:

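    import java.io.IOException;
    import java.util.Collections;
    import java.util.Map;

    import javax.servlet.GenericServlet;
    import javax.servlet.ServletException;
    import javax.servlet.ServletRequest;
    import javax.servlet.ServletResponse;
    import javax.servlet.http.HttpServletResponse;

    import org.eclipse.jetty.util.ajax.JSON;

    public class JsonServlet extends GenericServlet
    {
        // Jetty's own JSON mapper, held in a field and shared across requests
        private JSON json = new JSON();

        @Override
        public void service(ServletRequest req, ServletResponse res)
            throws ServletException, IOException
        {
            HttpServletResponse response = (HttpServletResponse)res;
            response.setContentType("application/json");
            // A new map is created for every request and then serialized
            Map<String,String> map =
                Collections.singletonMap("message","Hello, World!");
            json.append(response.getWriter(),map);
        }
    }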

The JsonServlet uses the Jetty JSON mapper to convert the trivial map that the test requires to be instantiated. Many of the other frameworks tested use Jackson, which is now marginally faster than Jetty’s JSON, but we wanted to have our first round with entirely Jetty code.

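    import java.io.IOException;
    import java.nio.charset.StandardCharsets;

    import javax.servlet.GenericServlet;
    import javax.servlet.ServletException;
    import javax.servlet.ServletRequest;
    import javax.servlet.ServletResponse;
    import javax.servlet.http.HttpServletResponse;

    import org.eclipse.jetty.http.MimeTypes;

    public class PlaintextServlet extends GenericServlet
    {
        // Encoded once when the servlet is created, then reused for every response
        byte[] helloWorld = "Hello, World!".getBytes(StandardCharsets.ISO_8859_1);

        @Override
        public void service(ServletRequest req, ServletResponse res)
            throws ServletException, IOException
        {
            HttpServletResponse response = (HttpServletResponse)res;
            response.setContentType(MimeTypes.Type.TEXT_PLAIN.asString());
            response.getOutputStream().write(helloWorld);
        }
    }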

The PlaintextServlet makes a concession to performance by pre-converting the string to a byte array, which is then simply written to the output stream for each response.
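The saving is easiest to see outside the servlet API. The following sketch uses only the JDK, and its class and method names are invented for illustration. It contrasts encoding the string on every call with handing out a byte array that was encoded once.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

/** Illustrative only: per-call encoding versus a body encoded once and reused. */
final class PreEncodedBody {

    private static final String TEXT = "Hello, World!";

    // Encoded once, when the class is initialised. Callers must treat it as read-only.
    private static final byte[] HELLO_WORLD = TEXT.getBytes(StandardCharsets.ISO_8859_1);

    /** Naive variant: allocates and fills a new byte[] on every call. */
    static byte[] encodeEachTime() {
        return TEXT.getBytes(StandardCharsets.ISO_8859_1);
    }

    /** Pre-encoded variant, as in PlaintextServlet: returns the same array every time. */
    static byte[] preEncoded() {
        return HELLO_WORLD;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.equals(encodeEachTime(), preEncoded())); // true: same bytes
        System.out.println(preEncoded() == preEncoded());                  // true: one shared array
        System.out.println(encodeEachTime() == encodeEachTime());          // false: new array per call
    }
}
```

Both variants put identical bytes on the wire; the pre-encoded one simply skips the per-response allocation and character encoding.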

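    import org.eclipse.jetty.server.HttpConfiguration;
    import org.eclipse.jetty.server.HttpConnectionFactory;
    import org.eclipse.jetty.server.Server;
    import org.eclipse.jetty.server.ServerConnector;
    import org.eclipse.jetty.servlet.ServletContextHandler;

    public final class HelloWebServer
    {
        public static void main(String[] args) throws Exception
        {
            Server server = new Server(8080);

            // Look up the HTTP configuration of the default connector
            ServerConnector connector = server.getBean(ServerConnector.class);
            HttpConfiguration config = connector.getBean(HttpConnectionFactory.class).getHttpConfiguration();

            // Enable the Date and Server response headers required by the test
            config.setSendDateHeader(true);
            config.setSendServerVersion(true);

            // Root context without security or session handling
            ServletContextHandler context =
                new ServletContextHandler(ServletContextHandler.NO_SECURITY|ServletContextHandler.NO_SESSIONS);
            context.setContextPath("/");
            server.setHandler(context);

            context.addServlet(org.eclipse.jetty.servlet.DefaultServlet.class,"/");
            context.addServlet(JsonServlet.class,"/json");
            context.addServlet(PlaintextServlet.class,"/plaintext");

            server.start();
            server.join();
        }
    }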

The servlets are run by an embedded server. The only configuration done to the server is to enable the headers required by the test and all other settings are the out-of-the-box defaults.

What’s wrong with the TechEmpower Benchmarks?

While Jetty has been kick-arse in these benchmarks, let’s not get carried away with ourselves, because the tests are far from perfect, especially these two tests, which are not testing framework performance (the primary goal of the TechEmpower benchmarks):

- Both have simple requests that have no information in them that needs to be parsed other than a simple URL. Realistic web loads often have session and security cookies as well as request parameters that need to be decoded.

- Both have trivial responses that are just the string “Hello World” with minimal encoding. Realistic web loads would have larger, more complex responses.

- The JSON test has a maximum concurrency of 256 connections with zero-delay turnaround between a response and the next request. Realistic scalable web frameworks must deal with many more, mostly idle, connections.

- The plaintext test has a maximum concurrency of 16,384 (which is a more realistic challenge), but uses pipelining to run these connections at what can only be described as a pathological workload! Pipelining is rarely used in real deployments.

- The tests appear to run only for 15s. This is insufficient time to reach steady state and it is no good your framework performing well for 15s if it is immediately hit with a 10s garbage collection starting on the 16th second.
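The steady-state concern in the last point is usually addressed by running an unmeasured warm-up phase followed by a much longer measured phase. Here is a minimal sketch of that shape using only the JDK. The class name, the durations and the `Runnable` standing in for “send one request” are all placeholders, and this is not how the benchmark harness itself works.

```java
import java.util.concurrent.TimeUnit;

/** Illustrative only: discard a warm-up phase, then measure over a longer window. */
final class WarmedUpBenchmark {

    /** Runs `op` repeatedly for roughly `nanos` nanoseconds and returns how many times it ran. */
    static long runFor(Runnable op, long nanos) {
        long deadline = System.nanoTime() + nanos;
        long count = 0;
        while (System.nanoTime() - deadline < 0) { // overflow-safe comparison
            op.run();
            count++;
        }
        return count;
    }

    /** Discards a warm-up phase, then returns operations per second over the measured phase. */
    static double measure(Runnable op, long warmUpNanos, long measureNanos) {
        runFor(op, warmUpNanos); // lets JIT compilation and caches settle; count is ignored
        long start = System.nanoTime();
        long count = runFor(op, measureNanos);
        long elapsed = System.nanoTime() - start;
        return count * 1e9 / elapsed;
    }

    public static void main(String[] args) {
        // Placeholder workload and durations, chosen only to keep the demo short.
        Runnable op = () -> Math.sqrt(System.nanoTime());
        double opsPerSec = measure(op, TimeUnit.SECONDS.toNanos(2), TimeUnit.SECONDS.toNanos(5));
        System.out.printf("%.0f ops/s%n", opsPerSec);
    }
}
```

The longer the measured phase, the more likely it is that a long garbage collection pause lands inside the window and shows up in the result instead of starting just after the test ends.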

But let me get off my benchmarking hobby-horse, as I’ve said it all before: see Truth in Benchmarking and Lies, Damned Lies and Benchmarks.

What’s good about the TechEmpower Benchmarks?

- There are many frameworks and servers in the comparison and whatever the flaws are, they are the same for all.

- The tests appear to be well run on suitable hardware within a controlled, open and repeatable process.

- Their primary goal is to test core mechanisms of web frameworks, such as object persistence. However, Jetty does not provide direct support for such mechanisms, so we have initially not entered all the benchmarks.

Conclusion

Both the JSON and plaintext tests are busy connection tests and the JSON test has only a few connections. Jetty has always prioritized performance for the more realistic scenario of many mostly idle connections, and this has shown that even under pathological loads, Jetty is able to fairly and efficiently share resources between all connections.

Thus it is an impressive result that even when tested far outside of its comfort zone, Jetty 9.1.0 has performed at the top end of this league table and provided results that, if you look beyond the headline throughput figures, present the best scalability. While the tested loads are far from realistic, the results do indicate that Jetty has very good concurrency and low contention.

Finally, remember that this is a .0 release aimed at delivering the new features of Servlet 3.1, and we’ve hardly even started optimizing Jetty 9.1.x.

1 Comment

brad · 24/12/2013 at 06:33

I agree that these synthetic benchmarks can be misleading. Furthermore, people seem to really get caught up in who is in one or two positions ahead of the other….but look at the absolute numbers most of the frameworks are producing! The raw numbers tell me I should not be judging most of these frameworks by performance at all and should be assessing frameworks based on other criteria. I mean, is someone going to drop a framework that delivers 10x what anyone would sanely relegate to a single server for one that delivers 12x? Look at lowly rails, consistently near the bottom of the list in every iteration of the techempower benchmark, yet rails is just fine for even moderately busy sites like github.