“Project Loom aims to drastically reduce the effort of writing, maintaining, and observing high-throughput concurrent applications that make the best use of available hardware. … The problem is that the thread, the software unit of concurrency, cannot match the scale of the application domain’s natural units of concurrency — a session, an HTTP request, or a single database operation. … Whereas the OS can support up to a few thousand active threads, the Java runtime can support millions of virtual threads. Every unit of concurrency in the application domain can be represented by its own thread, making programming concurrent applications easier. Forget about thread-pools, just spawn a new thread, one per task.” – Ron Pressler, State of Loom, May 2020

Project Loom brings virtual threads (back) to the JVM in an effort to reduce the difficulty of writing high-throughput concurrent applications. Loom has generated a fair bit of interest, with claims that asynchronous APIs may no longer be necessary for things like Futures, JDBC, DNS, Reactive, etc. Since Loom is now available in OpenJDK 16 early-access builds, we thought it was a good time to test some of the amazing claims that have been made for Duke's new opaque clothing woven by Loom! Spoiler: Duke might not be naked, but its attire could be a tad see-through!

All the code from this blog is available in our loom-trial project and has been run on my dev machine (Intel® Core™ i7-6820HK CPU @ 2.70GHz × 8, 32GB memory, Ubuntu 20.04.1 LTS 64-bit, OpenJDK Runtime Environment (build 16-loom+9-316)) with no specific tuning and default settings unless noted otherwise.

Some History

We started writing what would become Eclipse Jetty in 1995 on Java 0.9. For its first decade, Jetty was a blocking server using a thread per request and then a thread per connection, and large thread pools (sometimes many thousands) were sufficient to handle almost all the loads offered.

However, a few deployments wanted more parallelism, and the advent of virtual hosting meant that servers often shared physical machines with other server instances, all trying to pre-allocate maximum resources in their idle thread pools to handle potential load spikes.

So there was some demand for async, and Jetty-6 in 2006 introduced some asynchronous I/O. Yet it was not until Jetty-9 in 2012 that we could say Jetty was fully asynchronous, through the container and into the application, and we still fight with that complexity today.

Over this time, Java threads were initially implemented as Green Threads, and there were lots of problems with livelock, priority inversion, etc. It was a huge relief when native threads were introduced to the JVM, so we were a little surprised at the enthusiasm expressed for Loom, which appears to be a revisit of late-stage MxN Green Threads and suffers from at least some similar limitations (e.g. the CPUBound test demonstrates that the lack of preemption makes virtual threads unsuitable for CPU-bound tasks). This paper from 2002 on Multithreading in Solaris gives an excellent background on this subject and describes the switch from MxN threading to 1:1 native threads with terms like “better scalability”, “simplicity”, “improved quality” and that MxN had “not quite delivered the anticipated benefits”. So we are really interested to find out what is so different this time around.

The Jetty team has a near-unique perspective on the history of both Java threading and the development of highly concurrent large throughput Java applications, which we can use to evaluate Loom. It’s almost like we were frozen in time for decades to bring back our evil selves from the past 🙂

One Million Threads!

That’s a lot of threads and it is a claim that is really easy to test! Here is an extract from MaxVThreads:

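```java
// Extract from MaxVThreads. Thread.builder().virtual() is the early-access Loom API
// of the 16-loom builds; later JDKs replaced it with Thread.ofVirtual().
List<Thread> threads = new ArrayList<>();
CountDownLatch hold = new CountDownLatch(1);
while (threads.size() < 1_000_000)
{
    CountDownLatch started = new CountDownLatch(1);
    Thread thread = Thread.builder().virtual().task(() ->
    {
        try
        {
            started.countDown(); // signal that this thread is running
            hold.await();        // then block, keeping the thread alive
        }
        catch (InterruptedException e)
        {
            e.printStackTrace();
        }
    }).start();
    threads.add(thread);
    started.await(); // wait for the new thread to start before creating the next one
    System.err.printf("%s: %,d%n", thread, threads.size());
}
```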

We ran it and got:

```
...
VirtualThread[@244165d6,...]: 999,998
VirtualThread[@6f40da3b,...]: 999,999
VirtualThread[@1cfca01c,...]: 1,000,000
```

Async is Dead!!!

Long live Loom!!!

Lunch is Free!!!

Bullets are Silver!!!

But maybe we should look a little deeper before we throw out decades of painfully acquired asynchronous Java programming experience?

So what can Kernel Threads do?

This is easy to test: just take out the .virtual() call and you get MaxKThreads, which produces:

```
...
Thread[Thread-32603,5,main]: 32,604
Thread[Thread-32604,5,main]: 32,605
Thread[Thread-32605,5,main]: 32,606
OpenJDK 64-Bit Server VM warning: INFO: os::commit_memory(0x00007feba8e90000, 16384, 0) failed; error='Not enough space' (errno=12)
Exception in thread "main" java.lang.OutOfMemoryError: unable to create native thread: possibly out of memory or process/resource limits reached
	at java.base/java.lang.Thread.start0(Native Method)
	at java.base/java.lang.Thread.start(Thread.java:1711)
	at java.base/java.lang.Thread$Builder.start(Thread.java:956)
	at org.webtide.loom.MaxKThreads.main(MaxKThreads.java:27)
OpenJDK 64-Bit Server VM warning: INFO: os::commit_memory(0x00007ff4de073000, 16384, 0) failed; error='Not enough space' (errno=12)
```

Well, that is certainly a lot fewer than the number of Loom virtual threads: roughly 30 times fewer! But 32k threads is still a lot of threads, and that limit is actually an administrative one, so it is definitely possible to tune the system and increase the number of kernel threads. But let's take it at face value, because whilst you might get one order of magnitude more kernel threads, no amount of tuning is going to get 1,000,000 kernel threads.

So it is 1,000,000 vs 32,000! Surely that is game over? Perhaps, but let's look a little closer before we call it.
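For readers on a current JDK, the same experiment can be sketched with the final virtual thread API. This is a minimal sketch, not the loom-trial code: it assumes JDK 21 or later, where Thread.ofVirtual() and Thread.ofPlatform() replaced the early-access Thread.builder() API, and the class MaxThreadsSketch and its method are hypothetical names. As with removing .virtual() above, switching between kernel and virtual threads is only a matter of which builder is used.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CountDownLatch;

public class MaxThreadsSketch {

    // Starts threads that each block on a shared latch until `limit` threads exist
    // or thread creation fails, then releases and joins them. Returns how many started.
    static int startBlockedThreads(boolean virtual, int limit) throws InterruptedException {
        // Same test, two kinds of thread: the only difference is the builder.
        Thread.Builder builder = virtual ? Thread.ofVirtual() : Thread.ofPlatform();
        CountDownLatch hold = new CountDownLatch(1);
        List<Thread> threads = new ArrayList<>();
        try {
            while (threads.size() < limit) {
                CountDownLatch started = new CountDownLatch(1);
                threads.add(builder.start(() -> {
                    started.countDown();          // this thread is now running
                    try {
                        hold.await();             // block until the test releases everyone
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                }));
                started.await();                  // one thread at a time, as in MaxVThreads
            }
        } catch (OutOfMemoryError e) {
            // Platform threads typically end up here ("unable to create native thread").
            // Catching OOM is only tolerable in a throwaway experiment like this one.
        } finally {
            hold.countDown();                     // release every blocked thread
            for (Thread t : threads) {
                t.join();
            }
        }
        return threads.size();
    }

    public static void main(String[] args) throws InterruptedException {
        int limit = args.length > 0 ? Integer.parseInt(args[0]) : 10_000;
        System.out.printf("virtual threads started:  %,d%n", startBlockedThreads(true, limit));
        System.out.printf("platform threads started: %,d%n", startBlockedThreads(false, limit));
    }
}
```

Passing a larger limit (e.g. 1000000) as the first argument repeats the test at full scale; where the platform run stops depends on the OS and process limits of the machine.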

How is that even possible?

Given that the default stack size for Java on my machine is 1 MiB, how is it even possible to have 1,000,000 threads, when that would need 1,000,000 MiB (nearly 1000 GiB) of stack?

The answer is that, unlike kernel threads, which allocate the entire virtual memory range for a stack when the thread is started, virtual threads manage their stacks more dynamically and presumably do not allocate stack space until it is needed.

So Loom has been able to allocate 1,000,000 virtual threads on my laptop because my test was using minimal stack depths!

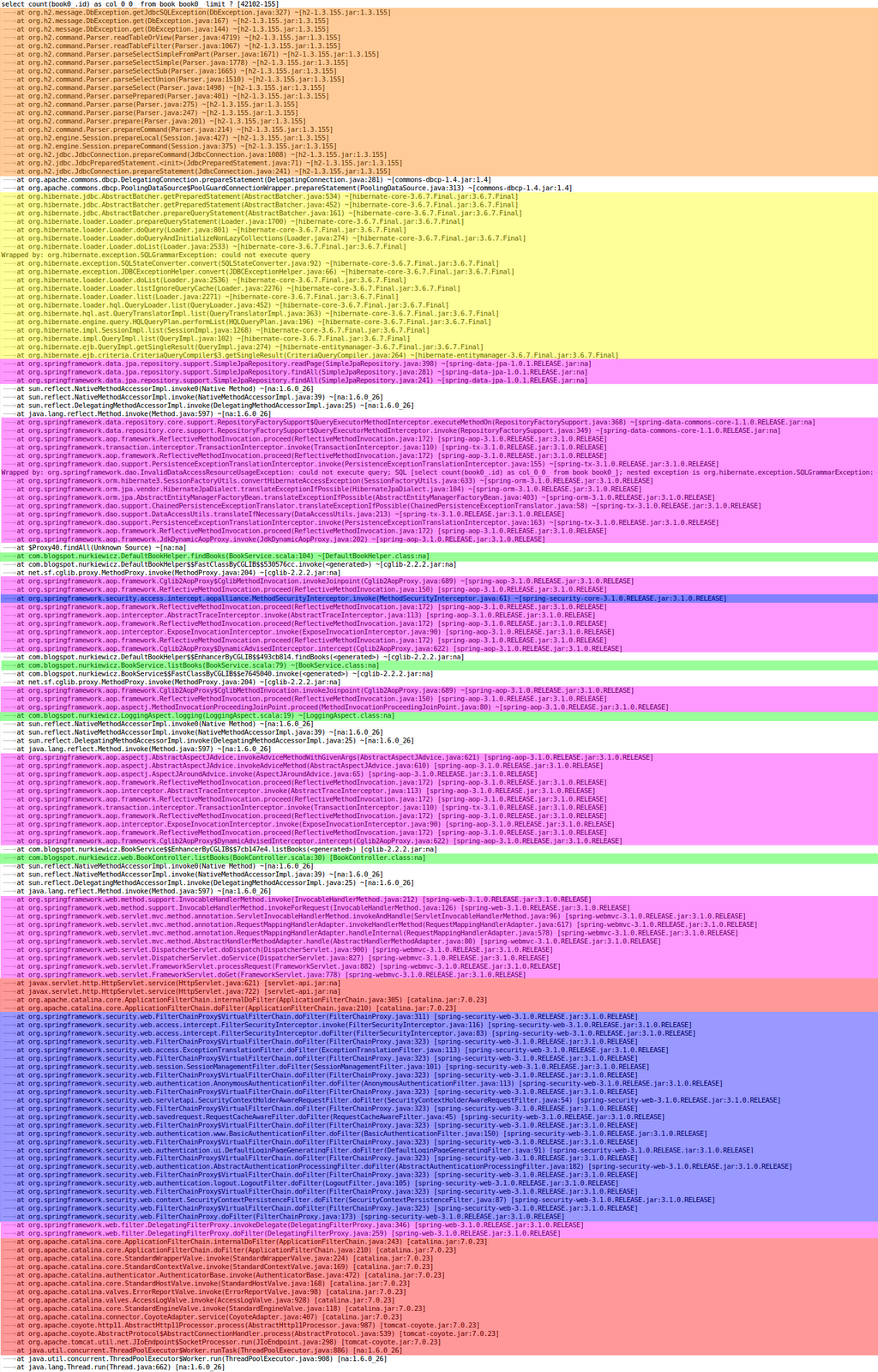

This is not very realistic, as the current trend is towards deeper and deeper stacks, such that we now routinely have to deal with the Stack Trace From Hell!

So we think we need to repeat our experiments with some non-trivial stacks and see what we get!

Non Trivial Stacks

Recursion is a good way to potentially blow up any stack, so we have used a contrived example of a “genetic algorithm” in DnaStack to simply generate some deep stacks, with a mix of fields on the stack and objects on the heap that are referenced from the stack. It is not very representative of most applications, but we think it will do for this initial investigation.

Simply running this test until the stack overflows reveals how deep our stacks can go; MaxStackDepth reports:

```
kthread maxDepth: ave:2,478 from:2 min:1,440 max:3,516
vthread maxDepth: ave:2,134 from:2 min:1,971 max:2,297
```

However, as smart as we think we are at writing contrived recursive examples, the writers of the JVM JIT are smarter: after a warm-up period, they must have found loops to unroll, tails to iterate and/or things to move on/off the stack, so that ultimately my test reports:

```
kthread maxDepth: ave:4,564 from:2 min:4,537 max:4,591
vthread maxDepth: ave:4,530 from:2 min:4,497 max:4,563
```

So both types of thread can support very deep stacks (or at least deep stacks with whatever fields my test managed to keep on the stack).
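The depth measurement itself can be sketched as follows. This is a hypothetical stand-in for DnaStack/MaxStackDepth, assuming JDK 21 or later: it recurses on a thread created from the given builder until a StackOverflowError is thrown and reports the deepest frame reached. Its frames are much lighter than the genetic-algorithm frames described above, so it should not be expected to reproduce the numbers shown.

```java
public class MaxDepthSketch {

    // Recurses on a new thread from `builder` until StackOverflowError and
    // returns the deepest frame reached.
    static int maxDepth(Thread.Builder builder) throws InterruptedException {
        int[] deepest = new int[1];
        long[] heapData = {42};                   // heap object referenced from every frame
        Thread t = builder.start(() -> {
            try {
                recurse(1, heapData, deepest);
            } catch (StackOverflowError expected) {
                // deepest[0] now holds the last depth reached
            }
        });
        t.join();                                 // join() makes deepest[0] visible here
        return deepest[0];
    }

    private static long recurse(int depth, long[] heapData, int[] deepest) {
        deepest[0] = depth;
        long local = heapData[0] * 31 + depth;    // a local that stays live across the call
        return recurse(depth + 1, heapData, deepest) + local;
    }

    public static void main(String[] args) throws InterruptedException {
        // Repeat a few times: later runs may go deeper once the JIT has compiled recurse().
        for (int run = 1; run <= 5; run++) {
            int k = maxDepth(Thread.ofPlatform());
            int v = maxDepth(Thread.ofVirtual());
            System.out.printf("run %d: kthread maxDepth=%,d vthread maxDepth=%,d%n", run, k, v);
        }
    }
}
```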

However, for further testing, let's not be too extreme: we restrict our runs of this test to 1000-deep fake DNA protein stacks, tested only after warming up the JIT.
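A thread that holds a 1000-deep stack while it waits can be sketched like this. Again this is a hypothetical helper assuming JDK 21 or later, not the MaxDeepKThreads/MaxDeepVThreads code: each thread recurses a given number of frames, signals that its stack is built, and then blocks at the bottom so every frame stays live. The heap figure (totalMemory() minus freeMemory()) is only rough, since it also counts garbage that has not yet been collected.

```java
import java.util.concurrent.CountDownLatch;

public class DeepStackHolderSketch {

    // Recurse `depth` frames, signal that the stack is built, then block at the
    // bottom so that every frame stays live while the thread waits.
    static void holdAtDepth(int depth, CountDownLatch started, CountDownLatch hold)
            throws InterruptedException {
        if (depth == 0) {
            started.countDown();
            hold.await();
            return;
        }
        holdAtDepth(depth - 1, started, hold);
    }

    // Starts `count` threads, each blocked under a `depth`-frame stack, and returns a
    // rough measure of heap in use while all of them are blocked.
    static long usedHeapWithDeepThreads(Thread.Builder builder, int count, int depth)
            throws InterruptedException {
        CountDownLatch hold = new CountDownLatch(1);
        Thread[] threads = new Thread[count];
        for (int i = 0; i < count; i++) {
            CountDownLatch started = new CountDownLatch(1);
            threads[i] = builder.start(() -> {
                try {
                    holdAtDepth(depth, started, hold);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            started.await();                      // wait until this thread's deep stack exists
        }
        Runtime rt = Runtime.getRuntime();
        long used = rt.totalMemory() - rt.freeMemory();
        hold.countDown();                         // let every thread unwind and exit
        for (Thread t : threads) {
            t.join();
        }
        return used;
    }

    public static void main(String[] args) throws InterruptedException {
        int count = 1_000, depth = 1_000;
        System.out.printf("platform: ~%,d bytes of heap in use%n",
                usedHeapWithDeepThreads(Thread.ofPlatform(), count, depth));
        System.out.printf("virtual:  ~%,d bytes of heap in use%n",
                usedHeapWithDeepThreads(Thread.ofVirtual(), count, depth));
    }
}
```

The interesting comparison is between the two runs: a blocked platform thread keeps its frames on its native stack, whereas a blocked virtual thread's frames are held on the heap, which is the difference discussed below.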

Threads With Non-Trivial Stacks?

So how do virtual and kernel threads fare with non-trivial stacks? The test MaxDeepKThreads attempts to allocate 1,000,000 threads with 1000-deep stacks, but it fails at this point:

```
...
32,595: memory=3,707,764,736 : Thread[Thread-33593,5,main]
32,596: memory=3,707,764,736 : Thread[Thread-33594,5,main]
32,597: memory=3,707,764,736 : Thread[Thread-33595,5,main]
OpenJDK 64-Bit Server VM warning: INFO: os::commit_memory(0x00007f4a89e60000, 16384, 0) failed; error='Not enough space' (errno=12)
#
# There is insufficient memory for the Java Runtime Environment to continue.
```

So with deep stacks, kernel threads reached essentially the same 32k limit.

This makes sense, as kernel threads pre-allocate their stacks, so the limit applies equally to shallow and deep stacks, regardless of whether the stacks are used or not (yes, this suggests we could tune our stacks to be smaller, but let's not go there)!

This is how Loom fares with MaxDeepVThreads:

```
...
26,930: memory=5,922,357,248 : VirtualThread[@2f3c7b24,...]
SLOW 1,547ms
...
55,230: memory=8,409,579,520 : VirtualThread[@62c95d73,...]
55,231: memory=8,409,579,520 : VirtualThread[@2abbe420,...]
55,232: memory=8,409,579,520 : VirtualThread[@3210c2de,...]
SLOW 35,083ms
TOO SLOW!!!
```

Only 26K threads were started before a bad 1.5 s garbage collection, and then at 55K threads a 35 s garbage collection, with all 8.4GB of memory consumed, killed the test in a most ungraceful way. This is not the 1,000,000 threads claimed; it is the same order of magnitude as kernel threads.

The kernel threads used 3.7GB/32K = 114KB/thread of heap memory, whilst the Loom virtual threads used 8.4GB/55K = 152KB/thread of heap. The additional heap memory usage for virtual threads appears to be due to the stacks being stored on the heap rather than in kernel memory. Indeed, investigating with jcmd $pid VM.native_memory (after adding -XX:NativeMemoryTracking=summary to the command line of the JVM under test) reveals that the kernel threads had an additional 8.3GB of stack memory committed (257KB/thread), of which we cannot tell how much is actually used. We are not sure that either style of memory usage can be said to be better; they are just different:

- Kernel stacks are typically over-allocated and once allocated cannot be freed.

- Heap stacks can be allocated and freed dynamically with garbage collection.

- Kernel stacks are simple contiguous virtual memory data structures with good locality of reference and simple algorithms.

- Heap stacks are distributed in heap space and require more complex management and add stress to the garbage collector.

- Kernel stacks are allocated in quanta that can have significant unused space.

- Heap stacks can be allocated as needed within the heap, which itself is allocated in quanta, but those are shared with other heap usages.
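The per-thread heap figures above are simple division over the last reported line of each run. As a quick check, here is a tiny sketch using the exact byte counts from the MaxDeepKThreads and MaxDeepVThreads output (the class name is hypothetical):

```java
public class PerThreadMemory {

    // Bytes of heap per thread, using integer division (rounds down).
    static long bytesPerThread(long heapBytes, long threads) {
        return heapBytes / threads;
    }

    public static void main(String[] args) {
        // Last lines of the MaxDeepKThreads and MaxDeepVThreads runs shown above.
        long kHeap = 3_707_764_736L, kThreads = 32_597;
        long vHeap = 8_409_579_520L, vThreads = 55_232;
        System.out.println("kernel threads:  " + bytesPerThread(kHeap, kThreads) + " bytes/thread");
        System.out.println("virtual threads: " + bytesPerThread(vHeap, vThreads) + " bytes/thread");
    }
}
```

This gives 113745 and 152259 bytes per thread, i.e. the 114KB and 152KB (decimal kilobytes) quoted above.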

Of course, both of these tests could be tuned with heap sizes, stack sizes, etc., but the key point is that both approaches are more or less limited by memory and are of the same order of magnitude. Both tests achieve 10,000s of threads, and both could probably peak above 100,000 with careful tuning. Neither is hitting 100,000s, let alone 1,000,000.

Conclusion (part 1)

Loom does allow you to have many threads, even 1,000,000 threads, but not if those threads have deep stacks. It appears to increase heap memory usage for stack entries and also comes at the cost of long garbage collections. These are significant limitations on virtual threads, so they are not a Silver Bullet that can be used as a drop-in replacement for kernel threads. Loom is effectively running the same “business model” as gyms: they sell many more memberships than they have the capacity to simultaneously service. If every gym member wanted to join a spin class at the same time, the result would be much the same as every Loom virtual thread trying to use its stack at once. Gyms can be very successful, so it may well be that there are use-cases that will benefit from Loom, and that Duke is not naked; it's just that its new clothes are see-through lycra for going to the gym. We will see more in part 2.

3 Comments

Thomas · 04/01/2021 at 16:21

I guess the new virt-threads discussion is fuelled by v-threads as presented in specialized runtimes like Go. The idea is of course that Java can do the same. But with Go, it is deeply built into the language and runtime, not just an add-on.

Interesting approach, anyway 🙂

gregw · 04/01/2021 at 18:01

Thomas,

Indeed, things are always simpler if you don't change direction a few times as you develop a language. I think these blogs have been seen by some as negative commentary on Loom, whereas I think they are more a cautionary tale that there is often no simple fix for code that is already heavily dependent on particular styles in Java. But that's not to say that Java can't be used to write new things in styles similar to Go, nor to adapt existing code to those approaches… it's just not as simple as if you had started from there in the first place!

gregw · 05/01/2021 at 15:22

More good discussion at https://mail.openjdk.java.net/pipermail/loom-dev/2021-January/001976.html
